Pytorch如何保存训练好的模型

1、(1)只保存模型参数字典(推荐)

代码:

#保存

torch.save(the_model.state_dict(), PATH)

#读取

the_model = TheModelClass(*args, **kwargs)

the_model.load_state_dict(torch.load(PATH))

2、(2)保存整个模型

代码:

#保存

torch.save(the_model, PATH)

#读取

the_model = torch.load(PATH)

1、pytorch会把模型的参数放在一个字典里面,而我们所要做的就是将这个字典保存,然后再调用。比如说设计一个单层LSTM的网络,然后进行训练,训练完之后将模型的参数字典进行保存,保存为同文件夹下面的rnn.pt文件:

代码:

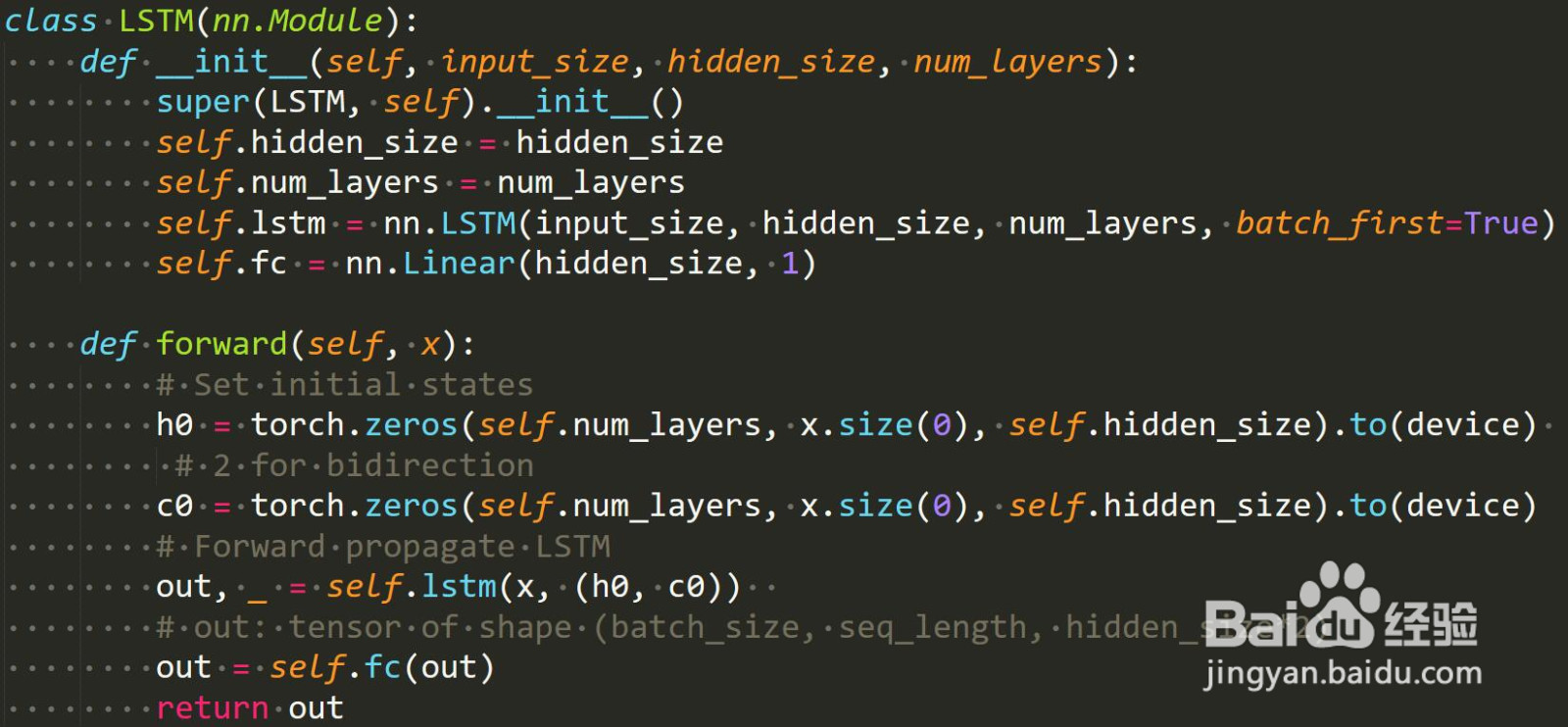

class LSTM(nn.Module):

def __init__(self, input_size, hidden_size, num_layers):

super(LSTM, self).__init__()

self.hidden_size = hidden_size

self.num_layers = num_layers

self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)

self.fc = nn.Linear(hidden_size, 1)

def forward(self, x):

# Set initial states

h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)

# 2 for bidirection

c0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)

# Forward propagate LSTM

out, _ = self.lstm(x, (h0, c0))

# out: tensor of shape (batch_size, seq_length, hidden_size*2)

out = self.fc(out)

return out

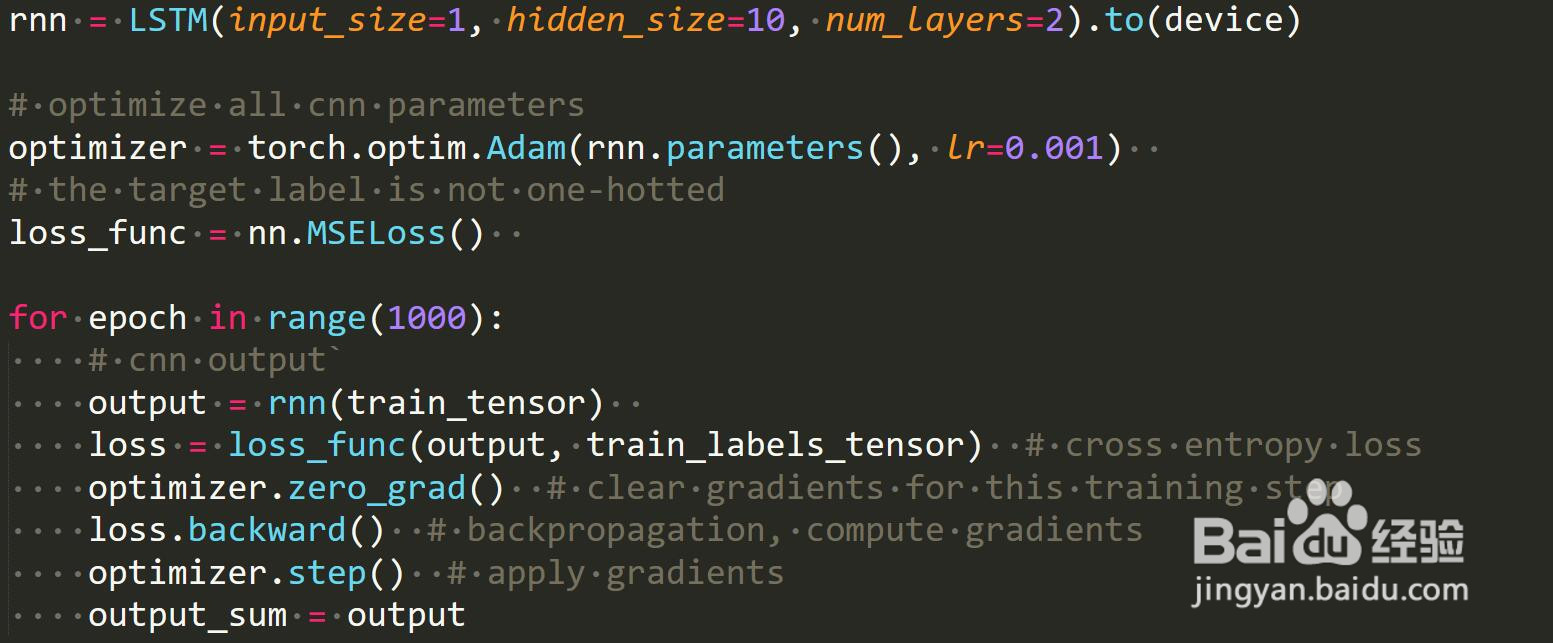

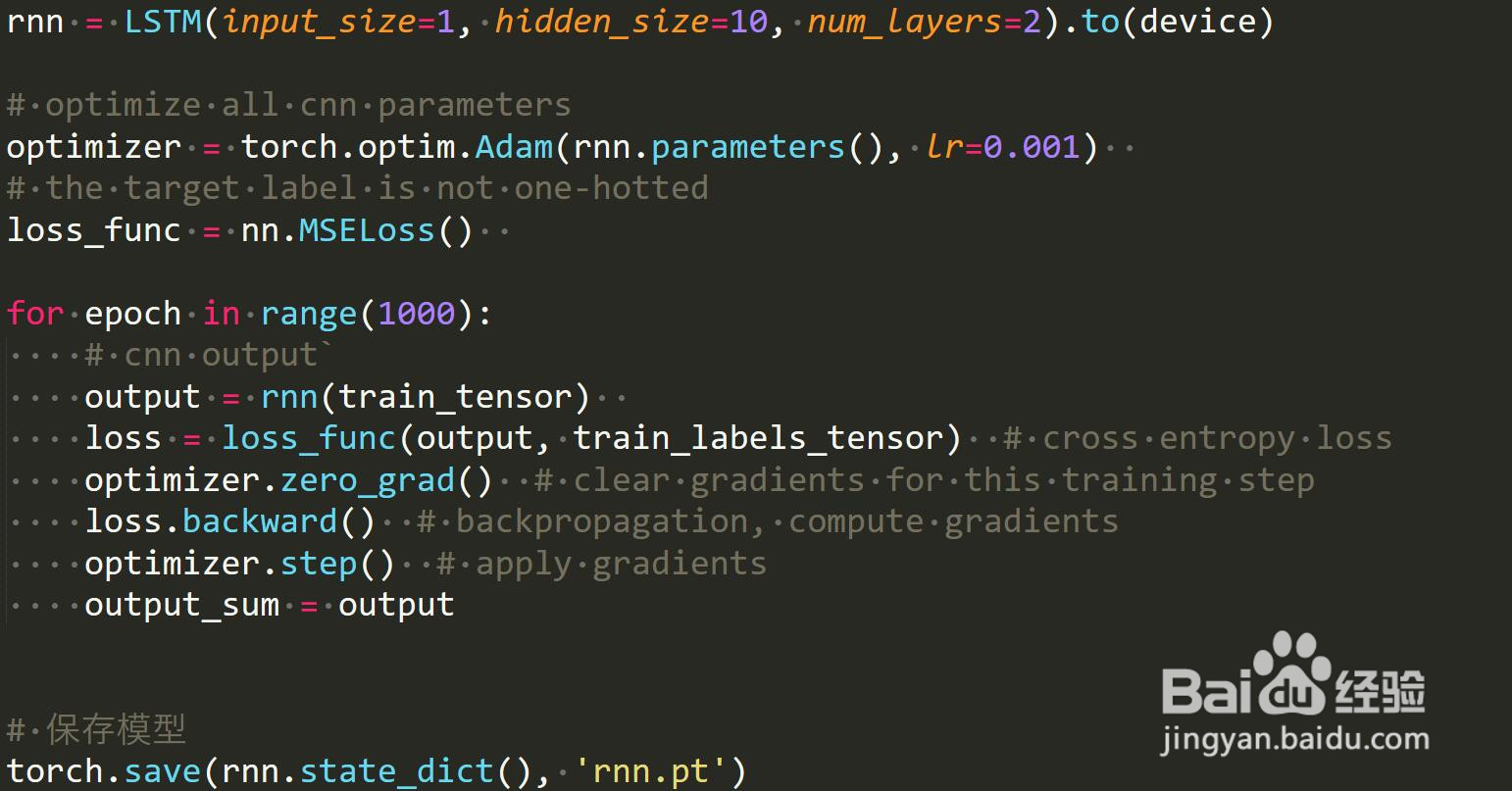

rnn = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device)

# optimize all cnn parameters

optimizer = torch.optim.Adam(rnn.parameters(), lr=0.001)

# the target label is not one-hotted

loss_func = nn.MSELoss()

for epoch in range(1000):

output = rnn(train_tensor) # cnn output`

loss = loss_func(output, train_labels_tensor) # cross entropy loss

optimizer.zero_grad() # clear gradients for this training step

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

output_sum = output

# 保存模型

torch.save(rnn.state_dict(), 'rnn.pt')

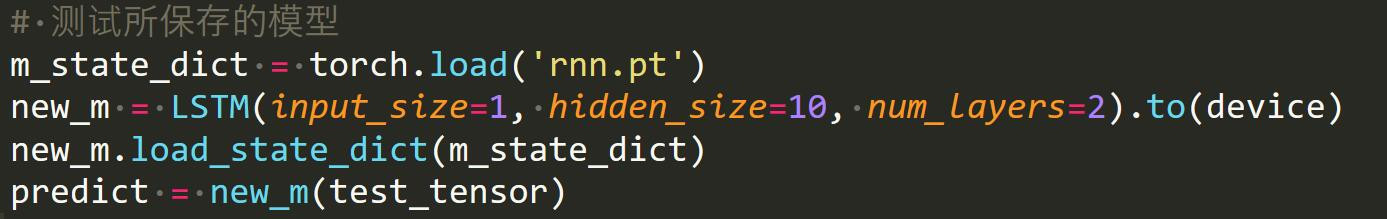

2、保存完之后利用这个训练完的模型对数据进行处理:

代码:

# 测试所保存的模型

m_state_dict = torch.load('rnn.pt')

new_m = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device)

new_m.load_state_dict(m_state_dict)

predict = new_m(test_tensor)

3、这里做一下说明,在保存模型的时候rnn.state_dict()表示rnn这个模型的参数字典,在测试所保存的模型时要先将这个参数字典加载一下:

m_state_dict = torch.load('rnn.pt');

然后再实例化一个LSTM对像,这里要保证传入的参数跟实例化rnn是传入的对象时一样的,即结构相同:

new_m = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device);

下面是给这个新的模型传入之前加载的参数:

new_m.load_state_dict(m_state_dict);

最后就可以利用这个模型处理数据了:

predict = new_m(test_tensor)

1、代码:

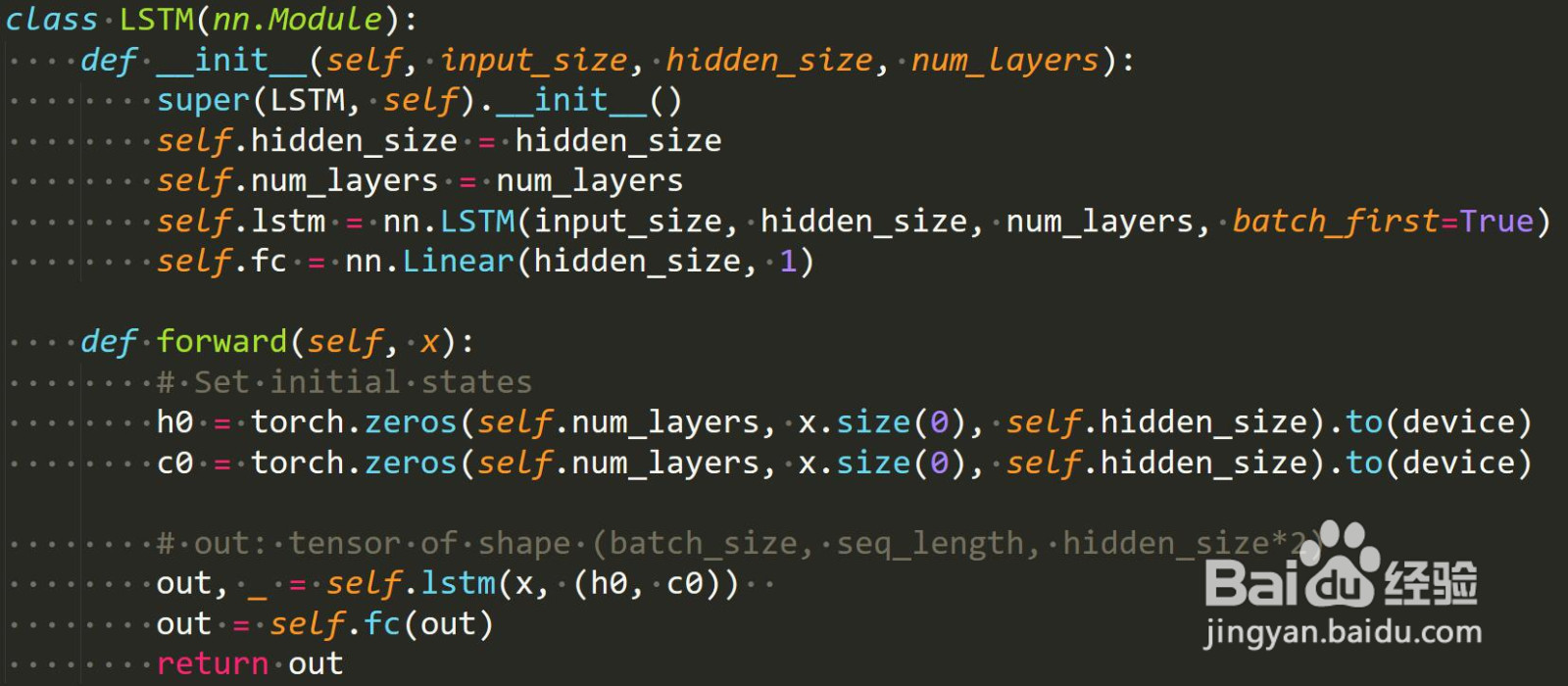

class LSTM(nn.Module):

def __init__(self, input_size, hidden_size, num_layers):

super(LSTM, self).__init__()

self.hidden_size = hidden_size

self.num_layers = num_layers

self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)

self.fc = nn.Linear(hidden_size, 1)

def forward(self, x):

# Set initial states

h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device) # 2 for bidirection

c0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)

# Forward propagate LSTM

out, _ = self.lstm(x, (h0, c0)) # out: tensor of shape (batch_size, seq_length, hidden_size*2)

# print("output_in=", out.shape)

# print("fc_in_shape=", out[:, -1, :].shape)

# Decode the hidden state of the last time step

# out = torch.cat((out[:, 0, :], out[-1, :, :]), axis=0)

# out = self.fc(out[:, -1, :]) # 取最后一列为out

out = self.fc(out)

return out

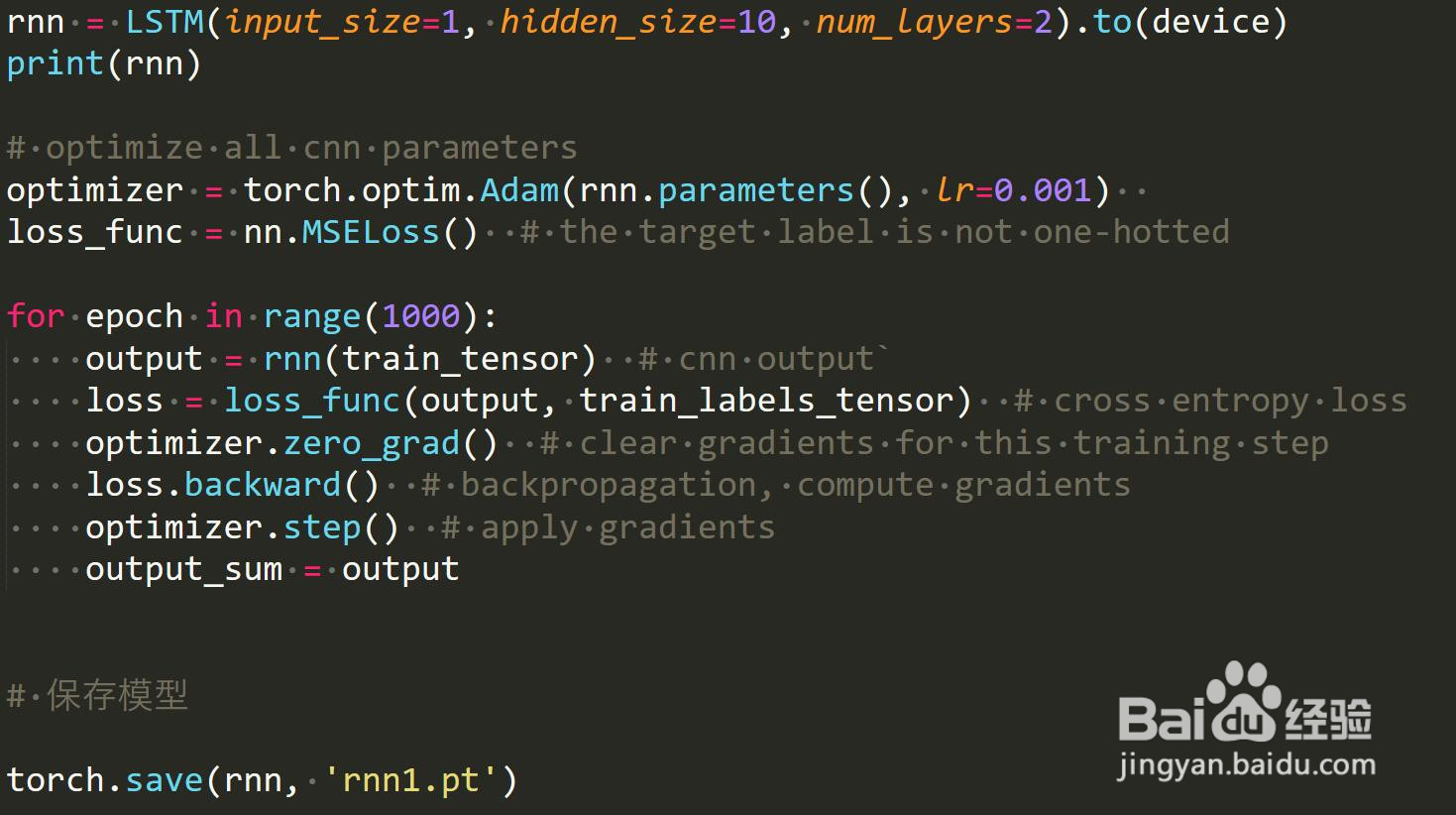

rnn = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device)

print(rnn)

optimizer = torch.optim.Adam(rnn.parameters(), lr=0.001) # optimize all cnn parameters

loss_func = nn.MSELoss() # the target label is not one-hotted

for epoch in range(1000):

output = rnn(train_tensor) # cnn output`

loss = loss_func(output, train_labels_tensor) # cross entropy loss

optimizer.zero_grad() # clear gradients for this training step

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

output_sum = output

# 保存模型

torch.save(rnn, 'rnn1.pt')

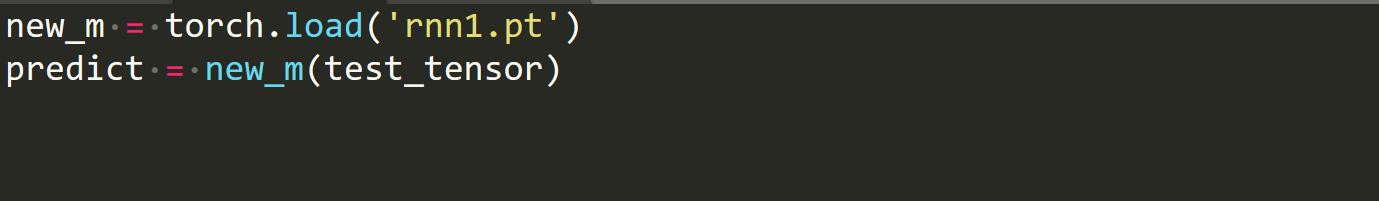

2、保存完之后利用这个训练完的模型对数据进行处理:

代码:

new_m = torch.load('rnn1.pt')

predict = new_m(test_tensor)

声明:本网站引用、摘录或转载内容仅供网站访问者交流或参考,不代表本站立场,如存在版权或非法内容,请联系站长删除,联系邮箱:site.kefu@qq.com。

阅读量:95

阅读量:81

阅读量:91

阅读量:20

阅读量:64